In this first of three guest posts on actionable quality data, we invited Matthew Littlefield, President & Principal Analyst at LNS Research, to share his insights on disparate data and actionable quality analytics.

Transforming Disparate Data into Actionable Quality Analytics

by Matthew Littlefield, President & Principal Analyst at LNS Research

“Data” is a word never far from quality professionals’ minds or lips. Because data is the most basic unit of the information that is a company’s lifeblood, this is hardly surprising. But when most companies talk about data and its associated challenges, the conversation is usually about more than just availability.

More often than not, the conversation is about obtaining the intelligence needed to react to an event or make a decision based on that data. Data and intelligence are two very different things. Intelligence requires a different level of technological and process capability than capture and aggregation alone.

Fortunately, both present-day solutions and emerging technology trends address these issues. Below we’ll explore how these data challenges have arisen, how market-leading companies are handling them today, and what emerging trends like Big Data and the Internet of Things (IoT) mean for the future of data and intelligence in quality management.

The Difference between Data and Intelligence

Companies collect massive quantities of data. From shop-floor technology like production execution systems or statistical process control up through ERP and other enterprise software, every modern system is continually collecting data. Raw data from these technologies, however, provides very little value. To serve as a basis for sound decision-making, it must be contextualized for the recipient’s role so that it delivers what everyone is really after: intelligence.

Even when data is distilled into clear and actionable information, it may be relevant and useful for only one job role, because shop-floor workers and plant managers are likely to need different information to do their jobs well. The key to getting the most out of data is therefore not availability or capture. It is having capable solutions that deliver the right intelligence to the right people at the right time.

The Challenge of Increasing Data Sources

Obtaining fast, actionable quality intelligence becomes even harder given the fractured quality IT architecture many companies rely on today. Whether this fragmentation stems from growing geographic footprints or from legacy point solutions deployed through shortsighted IT decisions, it contributes to some of the most pressing issues for quality executives.

According to our recent quality management survey, completed to date by more than 750 quality executives, 47% of respondents felt they had too many disconnected systems and data sources for managing quality. Examples of this fragmentation include:

- Disparate data systems and locations

- A lack of standardized processes and reporting

- Stacks of paper that only one set of eyes can view at a time

It’s not difficult to see why so many executives associate transforming data into intelligence with difficult-to-justify ROIs and resource constraints. Today’s leading companies, however, are addressing this challenge by implementing next-generation quality management analytics. These solutions are architected to compile data from the shop floor and to integrate easily with other enterprise IT systems and data sources. They use business intelligence and visualization technology that gives organizations the ability to contextualize and monitor quality data at the speed of manufacturing.

The Role of Big Data and IoT in Intelligence Gathering

Big Data promises high-speed, high-volume analytical capabilities that expose hidden patterns and previously unknown correlations. It is therefore positioned to dramatically increase the amount of actionable information quality professionals can gather and contextualize. This is in no small part due to the growing use of connected devices and assets, facilitated by another overarching technology trend: the IoT.

We expect the IoT not only to increase the amount of Big Data manufacturers collect but also to lower quality costs in the process. In the not-too-distant future, manufacturers could deploy more high-tech in-line testing and sensors (such as vision monitoring and process-to-process connectivity) to create even more granular end-to-end quality processes. This will, of course, bring its own Big Data and analytical challenges, but manufacturers are already starting to take action.

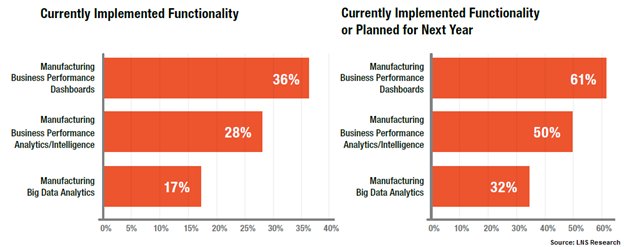

As shown in the chart above, 17% of respondents to our manufacturing operations management (MOM) survey already use some type of Big Data analytics in their manufacturing operations, and 32% intend to adopt Big Data analytics capabilities within the next year.

For quality professionals, this translates into a trend toward more real-time, role-based intelligence. That intelligence could streamline quality processes and decision-making and could profoundly affect key quality metrics such as the cost of quality (CoQ), Overall Equipment Effectiveness (OEE), and new product introductions (NPI).